We will now look at database design best practices to ensure that data access is not a bottleneck that negatively affects application performance.

What is the purpose of a good database design?

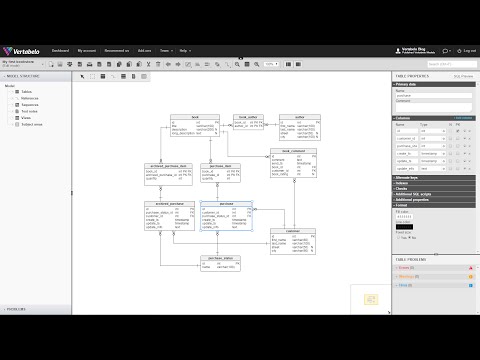

In addition to improving data access performance, a good design achieves other benefits, such as maintaining data consistency, accuracy, and reliability and reducing storage space by eliminating redundancies. Another benefit of good design is that the database is easier to use and maintain. Anyone who has to manage it will only need to look at the entity-relationship diagram (ERD) to understand its structure. ERDs are the fundamental tool of database design. They can be created and visualized at three levels of design: conceptual, logical, and physical. The conceptual design shows a very summarized diagram, with only the elements necessary to agree on criteria with the project stakeholders, who do not need to understand the technical details of the database. The logical design shows the entities and their relationships in detail but in a database-agnostic way. There are many tools you can use to facilitate the design of databases from ERDs. Among the best ones are DbSchema, SqlDBM, and Vertabelo.

DbSchema

DbSchema allows you to visually design and manage SQL, NoSQL, or Cloud databases. The tool allows you to design the schema on a computer and deploy it to multiple databases and generate documentation in HTML5 diagrams, write queries, and visually explore the data, among others. It also offers schema synchronization, random data generation, and SQL code editing with auto-completion.

SqlDBM

SqlDBM is one of the best database diagram design tools because it provides an easy way to design your database in any browser. No other database engine or modeling tools are required to use it, although SqlDBM allows you to import a schema from an existing database. It is ideal for teamwork, as it allows you to share design projects with co-workers.

Vertabelo

Vertabelo is an online visual database design tool that allows you to design a database logically and automatically derive the physical schema. It can reverse engineering, generate diagrams from existing databases, and control access to the diagrams by differentiating access privileges to owners, editors, and viewers. Finally, the physical design is the one that adds to the ERD all the necessary details to turn it into a usable database in a particular DBMS, such as MySQL, MariaDB, MS SQL Server, or any other. Let’s take a look at the best practices to keep in mind when designing an ERD so that the resulting database works at its best.

Define the type of database to design

Two fundamental types of databases are usually distinguished: relational and dimensional. Relational databases are used for traditional applications that execute transactions on the data – that is, they get information from the database, process it, and store the results. On the other hand, Dimensional databases are used for the creation of data warehouses: large repositories of information for data analysis and data mining to obtain insights. The first step in any database design task is to choose one of the two main database types to work with: relational or dimensional. It is vital to have this clear before you start designing. Otherwise, you can easily fall into design mistakes that will eventually lead to many problems and will be difficult (or impossible) to correct.

Adopting a Naming Convention

The names used in the database design are essential because, once an object is created in a database, changing its name can be fatal. Changing just one letter of the name can break dependencies, relationships, and even entire systems. That’s why it is critical to work with a healthy naming convention: a set of rules that saves you the trouble of trying 50 different possibilities to find the name of an object you can’t remember. There is no universal guide to what a naming convention should be to do its job. But the important thing is to establish a naming convention before naming any of the objects in a database and maintaining that convention forever. A naming convention establishes guidelines such as whether to use an underscore to separate words or to join them directly, whether to use all capital letters or capitalize words (Camel Case style), whether to use plural or singular words to name objects and so on.

Start with the conceptual design, then the logical design, and finally the physical design.

That’s the natural order of things. As a designer, you may be tempted to start by creating objects directly on the DBMS to skip steps. But this will prevent you from having a tool to discuss with stakeholders to ensure that the design meets the business requirements. After the conceptual design, you must move on to the logical design to have adequate documentation to help the programmers understand the database structure. It is vital to keep the logical design updated to be independent of the database engine to be used. This way, if you eventually migrate the database to a different engine, the logical design will still be useful. Finally, the physical design can be created by the programmers themselves or by a DBA, taking the logical design and adding all the implementation details needed to implement it on a particular DBMS.

Create and maintain a data dictionary

Even if an ERD is clear and descriptive, you should add a data dictionary to make it even clearer. The data dictionary maintains coherence and consistency in the database design, particularly when the number of objects in it grows significantly. The main purpose of the data dictionary is to maintain a single repository of reference information about the entities of a data model and its attributes. The data dictionary should contain the names of all entities, the names of all attributes, their formats and data types, and a brief description of each. The data dictionary provides a clear and concise guide to all the elements that make up the database. This avoids creating multiple objects that represent the same thing, which makes it difficult to know which object to resort to when you need to query or update information.

Maintain consistent criteria for primary keys

The decision to use natural keys or surrogate keys must be consistent within a data model. If entities in a data model have unique identifiers that can be efficiently managed as primary keys of their respective tables, there is no need to create surrogate keys. But it is common for entities to be identified by multiple attributes of different types – dates, numbers, and/or long strings of characters – which may be inefficient for forming primary keys. In these cases, it’s better to create surrogate keys of integer numeric type, which provide maximum efficiency in index management. And the surrogate key is the only option if an entity lacks attributes that uniquely identify it.

Use the correct data types for each attribute.

Certain data give us the option of choosing which data type to use to represent them. Dates, for example. We can choose to store them in date type fields, date/time type fields, varchar type fields, or even numeric type fields. Another case is numeric data that is not used for mathematical operations but to identify an entity, such as a driver’s license number or a zipcode. In the case of dates, it is convenient to use the engine’s data type, which makes it easier to manipulate data. If you need to store only the date of an event without specifying the time, the data type to choose will be simply Date; if you need to store the date and time when a certain event occurred, the data type should be DateTime. Using other types, such as varchar or numeric, to store dates may be convenient but only in very particular cases. For example, if it is not known in advance in which format a date will be expressed, it is convenient to store it as varchar. If search performance, sorting, or indexing is critical in handling date-type fields, a previous conversion to float can make a difference. Numeric data not involved in mathematical operations should be represented as varchar, applying format validations in the recording to avoid inconsistencies or repetitions. Otherwise, you expose yourself to the risk that some data exceeds the limitations of the numeric fields and forces you to refactor a design when it is already in production.

Use of lookup tables

Some inexperienced designers may believe that the excessive use of lookup tables to normalize a design can complicate the ERD of a database unnecessarily because it adds a large number of “satellite” tables that sometimes do not have more than a handful of elements. Those who think this should understand that the use of lookup tables has many more benefits than disadvantages. If the complexity or size of an ERD is a problem, there are ERD design tools that allow you to visualize the diagrams in different ways to be understood despite their complexity. This sample query illustrates the correct use of lookup tables in a well-designed database: In this case, we are using lookup tables for Cities and States. The benefits of lookup tables include reducing the size of the database, improving search performance, and imposing restrictions on the valid data set a field can contain, among others. It is also good practice for all lookup tables to include a Bit or a Boolean field that indicates whether a record in the table is in use or is obsolete. This field can be used as a filter to avoid obsolete elements as options in the application UI.

Normalize or denormalize according to database type

In relational databases used for traditional applications, normalization is a must. It is well known that normalization reduces the required storage space by avoiding redundancies. It improves the quality of information and provides multiple tools to optimize performance in complex queries. However, in other types of databases, a technique known as denormalization is applied. In dimensional databases, used as data warehouses, denormalization adds certain useful redundant information in the schema tables. Although they seem to be opposite concepts, denormalization does not mean undoing normalization. It is actually an optimization technique applied to a data model after normalizing it to simplify query writing and reporting.

Designing physical models in parts

In a software development project, the database designer presents a large-scale conceptual model to stakeholders, in which no implementation details are shown. In turn, to work with developers, the designer must provide a physical model with all the details of each entity and attribute. However, both models don’t need to be completely created at the beginning of the project. When applying agile methodologies, each developer at the beginning of each development cycle takes one or more user stories to work with during that cycle. The database designer’s job is to provide each developer with a physical submodel that includes only the objects they need for a work unit. At the end of each development cycle, the submodels created during that cycle are merged so that the complete physical model takes shape parallel to the application’s development.

Making good use of views and indexes

Views and indexes are two fundamental tools in database design to improve application performance. The use of views allows handling abstractions that simplify queries, hiding unnecessary table details. In turn, views make query optimization tasks easier for database engines since they allow them to anticipate how data will be obtained and choose the best strategies to deliver query results faster. Indexes can improve the performance of a slow query based on user experience once the database is in production. However, index creation can be done as part of the database design tasks, anticipating the application’s needs. For the creation of indexes, you need to have an approximate idea of the magnitude of each table – in terms of record count – and then create indexes for the bigger tables. To choose the fields to include in an index, you must consider mainly those representing foreign keys and those that will be used as filters in the searches.

When you think that the work is finished, it is time to refactor.

The design of a database can always be improved. When there are no changes to the database due to new requirements or new business needs, it is a good opportunity to carry out refactoring procedures that improve the design. Refactoring means simply that: introducing changes that improve a design without affecting the semantics of the database. There are many refactoring techniques to improve the design of a database that fall beyond the scope of this article, but it is good to know of their existence to use them when needed. Having this list of best practices at hand whenever you need to design a database will allow you to obtain the best results so that the applications always maintain optimal performance in data access.

![]()